Creating a Scalable Graphite Cluster

7 Nov 2014

There are a few decent posts on the internet about clustering graphite. Most examples I found were generally straightforward, but none of them addressed metric aggregation. My employer requires aggregation for various subsets of metric data. So be it. Our write-intensive ssd graphite servers, running in a mirrored configuration, were so bogged down by i/o saturation that they were failing to render requests. We were also approaching the impending doom of storage constraints.

Metrics don’t have any value if you can’t ingest the data. Nothing like forced motivation to make something work.

My goal was to migrate to a real cluster without painting myself into another corner. I needed something that could scale regardless of whether i/o, cpu, memory, network or storage becomes the bottleneck. I required data redundancy. I wanted a setup that would be a no-brainer to manage the next time I have to add a new node, scale one part to address QoS issues or restore missing metrics. Luckily all the hard work has already been done for me, thanks to carbonate, a suite of tools to manage graphite clusters. The only hurdle left was to deal with aggregation. After I wasted way too much time fighting this, someone smarter than me quickly noticed that carbonate does not consider aggregation and only supports the normal consistent hash ring. Metrics were breaking the hash ring and being flagged as not belonging to the nodes they landed on. I started to go down the path of patching carbonate to use AggregatedConsistentHashingRouter when I decided that was a horrible choice. Any time you can track upstream instead of maintaining your own patched copy of a third-party tool, you should.
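To make the "does not belong on this node" problem concrete, here is a minimal sketch of a consistent hash ring with a replication factor. It is an illustration only: the class, the hashing details and the node names are my own assumptions, not carbon's or carbonate's actual code. The point is that a tool checking ownership must hash the same key the router used; if the router picked a node from some other key, the check fails.

```python
import bisect
import hashlib


class HashRing:
    """Simplified consistent hash ring (illustrative, not carbon's implementation)."""

    def __init__(self, nodes, points_per_node=100):
        # Each node is placed on the ring many times to even out the distribution.
        self._ring = sorted(
            (self._position(f"{node}:{i}"), node)
            for node in nodes
            for i in range(points_per_node)
        )
        self._node_count = len(set(nodes))

    @staticmethod
    def _position(key):
        # Map any string to an integer position on the ring.
        return int(hashlib.md5(key.encode("utf-8")).hexdigest()[:8], 16)

    def get_nodes(self, metric, count=1):
        """Return the first `count` distinct nodes clockwise from the metric."""
        count = min(count, self._node_count)
        i = bisect.bisect_left(self._ring, (self._position(metric), ""))
        found = []
        while len(found) < count:
            node = self._ring[i % len(self._ring)][1]
            if node not in found:
                found.append(node)
            i += 1
        return found


def belongs_on(ring, metric, node, replication_factor=2):
    """True if `node` is one of the ring's owners for `metric`."""
    return node in ring.get_nodes(metric, replication_factor)


# Hypothetical node names, for illustration only.
ring = HashRing(["graphite01", "graphite02", "graphite03"])
owners = ring.get_nodes("prod.applications.apache.www01.requests", count=2)
```

With a replication factor of 2, exactly two of the three nodes own any given metric. A metric that was placed using a different key can land on the third node, where a check like `belongs_on` reports it as misplaced.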

The flexibility of the carbon daemons made for an easy solution: move all aggregation out front, before metrics even reach the cluster. At first glance I didn’t like the idea because I know there are plenty of spare cpu cycles on the nodes in the cluster. If you’re really that worried about idle cores you could send metrics to aggregators on the nodes with a central destination, but that just feels dirty and wrong. It would reintroduce a layer of complexity we just removed from the cluster and make network graphs or documentation impossible to decipher. If you build something that nobody else can figure out once you’re gone, then you have failed and should be smacked in the face. Twice.

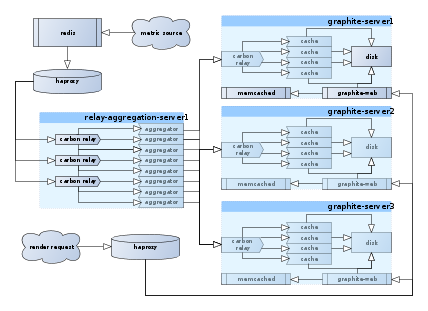

Here is a brief overview of the solution that meets my requirements and fulfills my personal goals.

Metric sources first feed into redis. This allows us to drop the cluster for maintenance without data loss. From redis we pass data to haproxy, which is easy to configure on its own and trivial with the help of the puppetlabs-haproxy module. HAProxy load balances to multiple carbon-relay processes. The carbon-relay processes use the aggregated consistent hashing algorithm so that all metrics feeding a given aggregate are forwarded to the same aggregation process. If a metric meets an aggregation rule then voodoo happens and more metrics are created, otherwise the metric just gets forwarded. The aggregators are configured with a replication factor of 2, so every metric leaving an aggregation pid gets forwarded to 2 nodes in the cluster, meeting our redundancy requirements. On paper this looks similar to the federated design you may have stumbled on. The difference is this actually supports storage redundancy within the cluster. All carbon-aggregator and carbon-cache pids are bound to localhost. The only parts of the setup that need to be aware of other nodes are the destination list for the carbon-aggregator pool and the cluster_servers setting in graphite-web.
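The idea behind aggregated consistent hashing can be sketched in a few lines: the relay rewrites a metric name into the name of the aggregate it would feed and picks the aggregator from that name, so every input of one aggregate arrives at the same process. The sketch below is a simplified stand-in, not carbon's code. The function names and aggregator addresses are hypothetical, the pattern handling covers only `<field>` and `*`, and a plain hash-modulo replaces the real hash ring to keep it short.

```python
import hashlib
import re


def compile_rule(input_pattern, output_template):
    """Turn a rule's input pattern into a regex; keep the output template as-is."""
    parts = []
    for part in input_pattern.split("."):
        field = re.fullmatch(r"<(\w+)>", part)
        if field:
            # <name> captures exactly one dotted path component.
            parts.append(f"(?P<{field.group(1)}>[^.]+)")
        else:
            # '*' matches anything within a single path component.
            parts.append(re.escape(part).replace(r"\*", "[^.]*"))
    return re.compile(r"\.".join(parts)), output_template


def routing_key(metric, rules):
    """Aggregate name the metric feeds, or the metric itself if no rule matches."""
    for regex, template in rules:
        match = regex.fullmatch(metric)
        if match:
            key = template
            for name, value in match.groupdict().items():
                key = key.replace(f"<{name}>", value)
            return key
    return metric


def pick_aggregator(metric, rules, aggregators):
    """Choose an aggregator from the routing key (hash-modulo stands in for the ring)."""
    key = routing_key(metric, rules)
    digest = int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)
    return aggregators[digest % len(aggregators)]


# Example rule and hypothetical local aggregator addresses.
rules = [compile_rule("<env>.applications.<app>.*.requests",
                      "<env>.applications.<app>.all.requests")]
aggregators = ["127.0.0.1:2023", "127.0.0.1:2123"]

a = pick_aggregator("prod.applications.apache.www01.requests", rules, aggregators)
b = pick_aggregator("prod.applications.apache.www02.requests", rules, aggregators)
# a == b: both hosts feed prod.applications.apache.all.requests
```

Metrics that match no rule are routed by their own name, which is the "otherwise the metric just gets forwarded" case. The replication to 2 storage nodes happens one hop later, when the aggregator picks its destinations.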

This setup spreads resource demands across the stack and lets every piece of the puzzle scale independently for most real-world scenarios.